OpenAI, the maker of the world’s most popular chatbot, is considering several strategies for expanding into artificial intelligence (AI) chips. According to CEO Sam Altman, the options include acquiring an AI chip manufacturer or developing chips in-house.

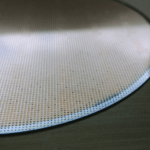

Currently, OpenAI, like most of its competitors, relies on hardware based on graphics processing units (GPUs) to develop models such as ChatGPT, GPT-4, and DALL-E 3. GPUs are well suited to training today’s most capable AI models because they can perform many computations in parallel.

However, the boom in generative AI has put enormous strain on the GPU supply chain. Microsoft is facing a shortage of the server hardware needed for AI workloads, which could lead to service disruptions. In addition, Nvidia’s best AI chips are sold out until 2024.

Building its own chips could save OpenAI a significant amount of money, because the cost of buying them is so high. Several analyses estimate that if ChatGPT requests reached one-tenth the scale of Google Search, about $48.1 billion would be needed for chips up front and about $16 billion a year to maintain them.

OpenAI is not the only company striving to create its own AI chips. Google already has its own processor, the TPU (short for “tensor processing unit”). Amazon offers its own chips to AWS customers for both training (Trainium) and inference (Inferentia). Microsoft is working with AMD to develop an in-house AI chip called Athena, which OpenAI is testing.