South Korean companies Samsung and Naver Cloud (part of Naver, a search company often compared to Google) have presented a joint report comparing the performance of their jointly developed AI chips with products from Western competitors.

The energy efficiency of the Samsung and Naver chips was compared with that of counterparts from Nvidia and Google. The tests ran Meta AI's LLaMA large language model at equal speeds. According to the report, the Korean chips are 8 times more energy efficient than the Western ones.
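To make the "8 times at equal speeds" claim concrete, here is a minimal arithmetic sketch. It assumes energy efficiency means tokens processed per joule, so at the same throughput an 8x gain means one eighth of the power draw. The 400 W baseline and 100 tokens per second are hypothetical figures chosen for illustration; the report does not give absolute numbers.

```python
def power_at_equal_speed(baseline_watts: float, efficiency_ratio: float) -> float:
    """Power needed to match the baseline's throughput on a chip that is
    `efficiency_ratio` times more energy efficient (tokens per joule)."""
    if baseline_watts <= 0 or efficiency_ratio <= 0:
        raise ValueError("power and efficiency ratio must be positive")
    return baseline_watts / efficiency_ratio


def energy_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy in joules spent on each generated token."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    return power_watts / tokens_per_second


# Hypothetical numbers, not taken from the report.
BASELINE_WATTS = 400.0      # assumed power draw of the comparison chip
TOKENS_PER_SECOND = 100.0   # assumed throughput, identical for both chips
EFFICIENCY_RATIO = 8.0      # the ratio claimed in the report

new_watts = power_at_equal_speed(BASELINE_WATTS, EFFICIENCY_RATIO)
print(f"baseline: {energy_per_token(BASELINE_WATTS, TOKENS_PER_SECOND)} J/token")
print(f"new chip: {energy_per_token(new_watts, TOKENS_PER_SECOND)} J/token")
```

Under these assumptions, the comparison depends only on the ratio: whatever the real baseline is, the energy spent per token falls by a factor of eight.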

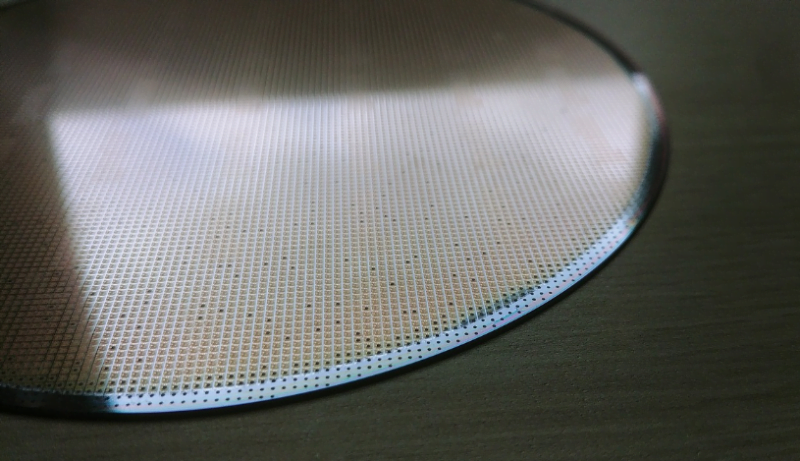

According to representatives of the partner companies, the high energy efficiency is achieved partly by integrating low-power DRAM into the chip (LPDDR; the exact type of memory is not specified). However, few details have been provided about how LPDDR works in the new chip or what exactly could lead to such a significant improvement.

Naver’s hyperscale language model HyperCLOVA also plays a key role in achieving high efficiency. Naver says it continues to refine the model and hopes to achieve even greater efficiency through better compression algorithms and a simpler model. HyperCLOVA currently has over 200 billion parameters.
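As a rough illustration of why compression matters for a model of this size, the sketch below estimates the memory needed just to hold the weights at different precisions. The 200 billion figure is the lower bound given above. The 16, 8 and 4 bit widths are assumptions for illustration only, since Naver has not said which compression scheme it uses. Activations and other runtime memory are ignored.

```python
def weight_footprint_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to store the model weights alone, in gigabytes (10^9 bytes)."""
    if num_params <= 0 or bits_per_param <= 0:
        raise ValueError("parameter count and bit width must be positive")
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 200_000_000_000  # "over 200 billion" parameters, taken as a lower bound

# Illustrative bit widths; not confirmed for HyperCLOVA.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_footprint_gb(NUM_PARAMS, bits):.0f} GB")
```

Each halving of the bit width halves the data that must be stored and moved between memory and compute, which is one plausible reason model compression and low-power memory are mentioned together.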