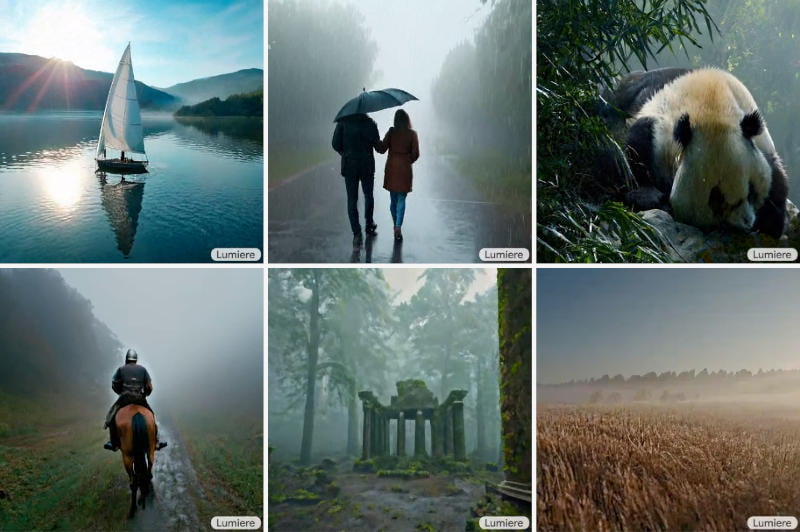

Google demonstrated the operation of the Lumiere spatiotemporal diffusion model. The new AI tool can create amazingly realistic videos up to five seconds long. The neural network animates still images or just parts of them in response to text prompts in natural language.

The most important difference between Google Lumiere and existing analogues is the unique architecture of the model – the video for its entire duration is generated in one pass. Other models work on a different principle: they generate several keyframes and then interpolate over time, which makes the generated movie difficult to be consistent. Lumiere works in several modes, for example, it converts text to video, converts static images into dynamic ones, creates videos in a given style based on a sample, allows you to edit an existing video using written prompts, animates certain areas of a static image, or edits a video fragmentarily – for example, it can change an item of clothing on a person.

At the moment this is just a research project. Therefore, Google does not need to aggressively neutralize the system to respect copyright, privacy and security, and prevent hate speech and nudity.